Core4ce’s Bio Surveillance Hub and Portal (BSHP) team is working to create a product that allows analysts to seamlessly navigate a wealth of health information. Using the portal, analysts can create and view biothreats in their combatant command region, access resources on various health topics, and review statistical reports that aid in their research.

This year, the BSHP team set out to build an algorithm that suggests related products within the Bio Surveillance Program database. The team wanted to link products, including Threats and Alerts, Knowledge Assets, and Analytics Reports, so that curators could find connections more easily. They planned to use a machine learning model to analyze product nodes and find similarities, but they knew that many product nodes lacked complete data properties and node relationships.

We spoke with Junior Data Scientist Andrew Schiff to learn more about his role on the project.

Describe the task you were focused on and how you used ChatGPT 3.5 to fulfill the requirement.

I was tasked with generating 2,000 data records (1,000 unique Threats and Alerts and 1,000 unique Knowledge Assets), each in a specified format with specific data properties, to populate the Bio Surveillance knowledge graph for machine learning modeling. Using AI to create synthetic data made this task much more manageable. Users have given ChatGPT a wide range of inputs, from creative requests to logical queries. I used ChatGPT 3.5 in both ways: creatively, to generate varied synthetic Threat and Alert and Knowledge Asset product data, and logically, to make sure each record made sense and was accurate enough to fit a defined format.
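
Requiring 1,000 *unique* records of each type implies some deduplication across generation rounds. The interview does not say how uniqueness was checked, so the sketch below is a hypothetical illustration: it assumes each generated record is a Python dict with a `title` field and treats two records as duplicates when their titles match after lowercasing and collapsing whitespace.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical titles compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def collect_unique(batches, target, key="title"):
    """Merge batches of generated records, keeping the first record seen for
    each normalized key, until `target` unique records have been collected.

    `key="title"` is an assumed field name, not the real schema."""
    seen = set()
    unique = []
    for batch in batches:
        for record in batch:
            k = normalize(record[key])
            if k in seen:
                continue  # duplicate of an earlier record; skip it
            seen.add(k)
            unique.append(record)
            if len(unique) == target:
                return unique
    return unique  # fewer than `target`: another generation round is needed


batches = [
    [{"title": "Cholera outbreak"}, {"title": "cholera  Outbreak "}],
    [{"title": "Dengue alert"}],
]
result = collect_unique(batches, 1000)
```

Keeping the first occurrence preserves generation order, which makes it easy to trace a kept record back to the batch that produced it.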

What should data scientists keep in mind when using AI to create data records?

There are a few important questions to ask at the outset:

- What data am I providing as input for the AI to refer to?

- What data am I expecting the AI to return?

- What format do I want the data returned in?

- How do I request this data from the AI in the best way possible to ensure I get what I want/expect?

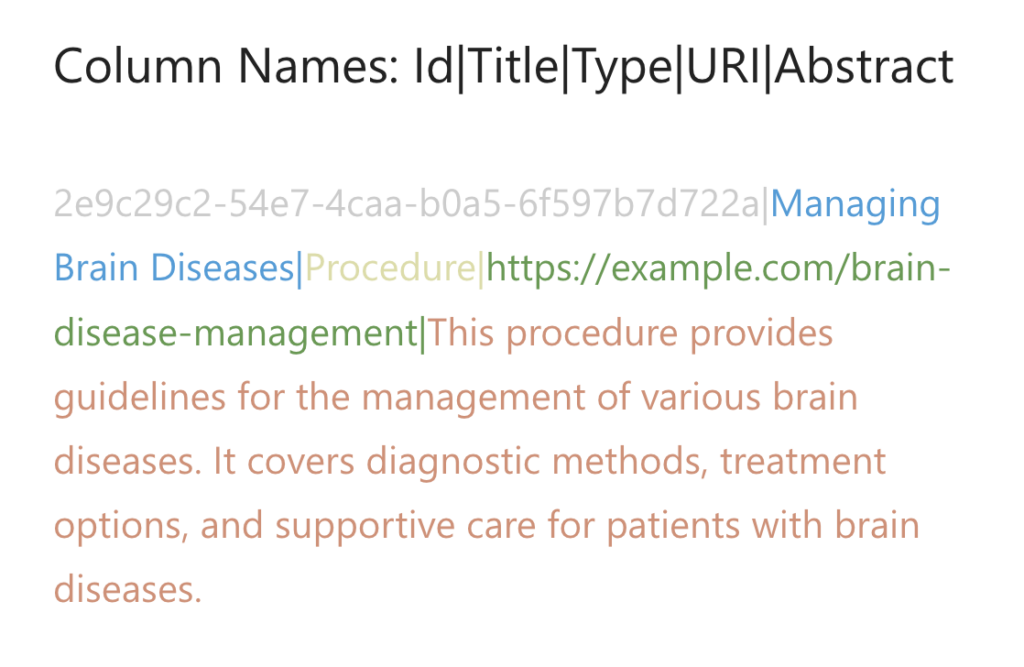

For this exercise, let’s focus on the creation of Knowledge Assets. A sample AI-generated product in the finalized format is shown below. This synthetic record was generated from the first record of the imported data, which concerns brain disease. Each value is separated by a “|” character, and the column names are provided alongside the values in a different colored font.
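
A pipe-delimited format like this is straightforward to check mechanically before records are loaded into the knowledge graph. The minimal sketch below assumes one record per line and uses hypothetical column names; the real Knowledge Asset schema is not given in the article.

```python
# Hypothetical column names; the real Knowledge Asset schema is not shown here.
COLUMNS = ["title", "summary", "disease", "region", "author"]


def parse_record(line: str, columns=COLUMNS, sep="|") -> dict:
    """Split one pipe-delimited line into a {column: value} dict,
    rejecting lines whose field count does not match the schema."""
    values = [v.strip() for v in line.split(sep)]
    if len(values) != len(columns):
        raise ValueError(
            f"expected {len(columns)} fields, got {len(values)}: {line!r}"
        )
    return dict(zip(columns, values))


record = parse_record(
    "Brain disease overview | Short summary | Prion disease | Global | analyst01"
)
```

Because the split is purely on the delimiter, a value that itself contains “|” would shift every later field; instructing the model never to use the delimiter inside a value is one way to avoid that.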

What data did you provide as input?

Since I wanted the synthetic data products to be linked to information in the knowledge graph (disease, organization, user, region, …), I provided records from our existing database as a reference. I created many variations of Knowledge Assets to ensure variety among the 1,000 synthetic records, including assets related to infectious diseases, non-infectious diseases, symptoms, transmission processes, and non-disease public health topics (e.g., food and water safety, natural disasters). I broke these categories down even further by tying some of them to specific geographic regions.
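
One way to enforce that variety is to plan how many records each category should receive before prompting. The interview does not give the actual proportions, so this sketch assumes an even split across the five categories named above and a hypothetical share of each category tied to a specific region.

```python
# Category labels follow the ones described above; the even split is an assumption.
CATEGORIES = [
    "infectious disease",
    "non-infectious disease",
    "symptom",
    "transmission process",
    "non-disease public health",
]


def allocate(total: int, categories) -> dict:
    """Split `total` records as evenly as possible across categories.
    Any remainder goes one record at a time to the first categories."""
    base, remainder = divmod(total, len(categories))
    return {
        cat: base + (1 if i < remainder else 0)
        for i, cat in enumerate(categories)
    }


def regional_split(count: int, regional_share: float) -> tuple:
    """Split one category's quota into (region-specific, general) counts.
    `regional_share` is a hypothetical tuning parameter."""
    regional = round(count * regional_share)
    return regional, count - regional


plan = allocate(1000, CATEGORIES)
infectious_regional, infectious_general = regional_split(
    plan["infectious disease"], 0.25
)
```

Each (category, regional/general) quota can then be requested in its own prompt, so no single category crowds out the others.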

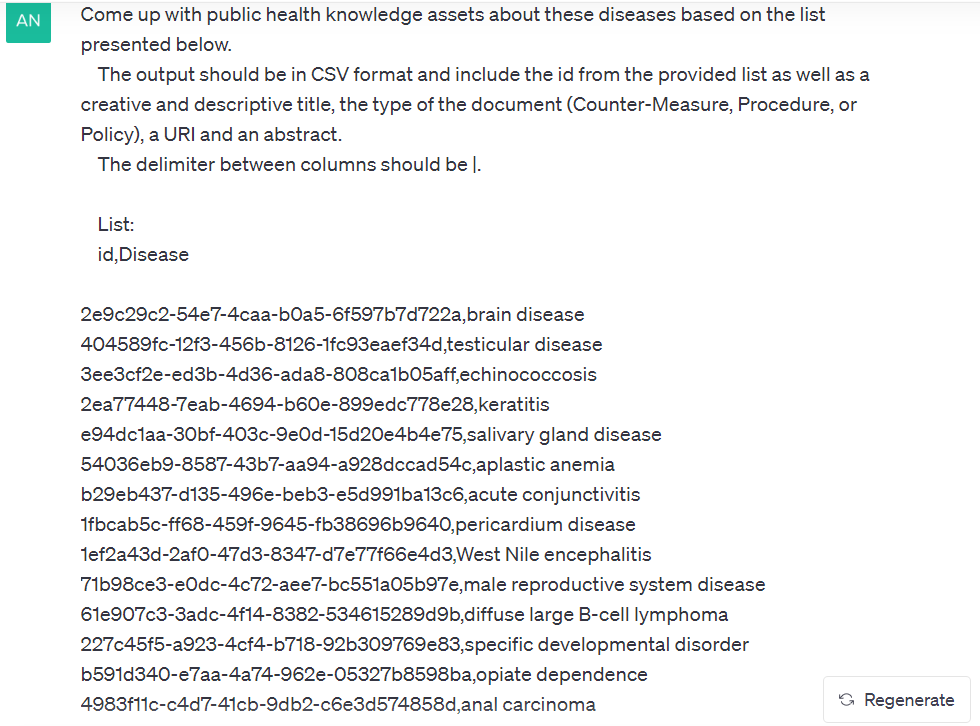

What prompt did you provide to ChatGPT 3.5 to complete the task?

The prompt had to be explicit about what the AI was being given, how it should use that data, and how I wanted the results returned. Specifying these features in as much detail as possible ensured that the AI returned results that closely fit my expectations and requirements. If the prompt is vague or leaves the AI too much wiggle room, it won’t know exactly what the user wants; it will try its best to generate something but may not always hit the mark. After much refinement and testing, I arrived at the prompt structure shown below.
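
The following is not that prompt. It is a hypothetical sketch of how a prompt covering the same three elements (what the model is given, how to use it, and how to return the results) could be assembled programmatically. The wording, the JSON encoding of the reference records, and the column names are all assumptions, and sending the prompt to ChatGPT 3.5 is left out.

```python
import json


def build_prompt(reference_records, n_records, columns, sep="|"):
    """Assemble a prompt that states (1) what data the model is given,
    (2) how to use it, and (3) exactly how to format the output."""
    header = sep.join(columns)
    return "\n\n".join([
        # 1. What the model is being given.
        "You are given reference records from an existing health database, "
        "as a JSON list:\n" + json.dumps(reference_records, indent=2),
        # 2. How the given data should be used.
        "Using only the diseases, organizations, users, and regions that "
        f"appear in the reference records, write {n_records} new, unique "
        "Knowledge Asset records.",
        # 3. How the results should be returned.
        f"Return only the records, one per line, with fields separated by "
        f"'{sep}' in this column order:\n{header}\n"
        f"Do not use '{sep}' inside any field and do not add any other text.",
    ])


prompt = build_prompt(
    [{"disease": "Prion disease", "region": "Global"}],
    5,
    ["title", "summary", "disease", "region"],
)
```

Keeping each element in its own clearly separated section makes it easier to refine one part of the prompt during testing without disturbing the others.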

Any takeaways from using AI for this task?

AI can be a very powerful tool for generating data. I appreciated the help it provided on this task and plan to use it more in future projects to work efficiently. AI is not perfect today, and may not be for some time, which is why human involvement is crucial. For this use case, pairing human-written prompts with human verification of the AI-generated results struck an effective balance between human and machine.
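
Simple automated checks can support human verification without replacing it, for example by flagging generated values that do not match anything in the existing database so a reviewer knows where to look first. This is a minimal sketch assuming records are dicts and that the reference values per field (such as known disease and region names) have already been pulled from the knowledge graph; the field names are hypothetical.

```python
def flag_for_review(records, known, fields):
    """Return (record_index, field, value) for every checked value that is not
    among the known reference values, so a human reviewer can inspect it.

    `known` maps a field name to the set of values present in the existing
    database; `fields` lists which fields to check. Missing fields are
    treated as empty strings and therefore flagged."""
    issues = []
    for i, record in enumerate(records):
        for field in fields:
            value = record.get(field, "")
            if value not in known.get(field, set()):
                issues.append((i, field, value))
    return issues


known = {"disease": {"Prion disease", "Cholera"}, "region": {"Global"}}
records = [
    {"disease": "Cholera", "region": "Global"},
    {"disease": "Brain fever", "region": "Global"},
]
issues = flag_for_review(records, known, ["disease", "region"])
```

A flagged value is not necessarily wrong; it is simply a record a person should read before it enters the knowledge graph.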